The design, management, and deployment of electrical power and compute infrastructure are becoming inseparable in the era of AI data centers. Power, compute, and cooling components are increasingly planned and optimized as one integrated system. However, the high-density compute racks that AI workloads require leave little room for power components, which have to be disaggregated to make space for more densely packed servers. Those power components also have to deliver more power, more efficiently, than before. Judged on nominal power draw alone, a megawatt-scale GPU rack produces about 50 times more heat than a fully packed CPU rack.

In come the “sidecars”

The Open Rack v3 (ORv3) standard, developed by the Open Compute Project (OCP), was designed to support higher power densities and enable advanced features such as liquid cooling in flexible, scalable racks that can accommodate evolving AI and HPC workloads and are compatible with a wide range of IT equipment. In this model, each integrated rack is a “unit.” But 1 MW racks are breaking that paradigm.

Originally designed to support 18 kW to 36 kW per rack, ORv3 racks remain the gold standard for many workloads, but they were not intended for the megawatt racks on the horizon. Planning for 1 MW IT racks pushes the limits of standard ORv3 specifications, necessitating significant design modifications and the use of sidecars to manage power and cooling requirements.
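To put that gap in numbers, here is a minimal sketch that compares the 1 MW target with the 18 kW to 36 kW range quoted above. The constant and function names are illustrative, and the calculation is a plain ratio of nominal rack power, not a thermal or electrical model.

```python
# Figures taken from the text: the original ORv3 design range and the 1 MW target.
ORV3_MIN_KW = 18
ORV3_MAX_KW = 36
TARGET_KW = 1000  # 1 MW expressed in kW


def scale_factor(target_kw: float, baseline_kw: float) -> float:
    """Return how many times larger the target load is than the baseline."""
    if baseline_kw <= 0:
        raise ValueError("baseline_kw must be positive")
    return target_kw / baseline_kw


# Compare against the top and bottom of the original design range.
low = scale_factor(TARGET_KW, ORV3_MAX_KW)
high = scale_factor(TARGET_KW, ORV3_MIN_KW)

print(f"1 MW is {low:.1f}x to {high:.1f}x the original ORv3 range")
# prints: 1 MW is 27.8x to 55.6x the original ORv3 range
```

Even measured against the top of the original range, a 1 MW rack is roughly 28 times the load the rack was first designed for, which is why incremental upgrades are not enough.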

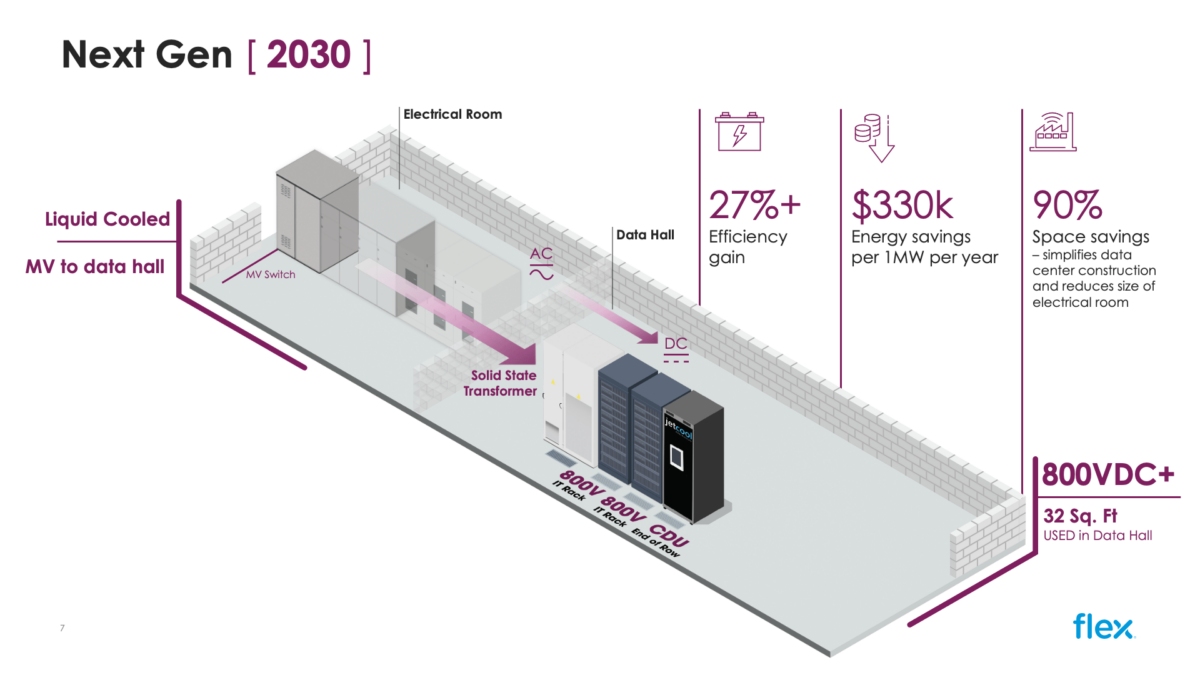

That’s why some hyperscalers are now collaborating to develop the Mt. Diablo standards under the aegis of the OCP, including a new specification for power racks that supports high-voltage DC power infrastructure and 1 MW IT loads per rack, and that disaggregates power, cooling, and IT to enable more of each. Instead of individual racks being largely independent systems, the coolant distribution unit (CDU), power distribution unit (PDU), and IT rack (or racks) become the collective unit that enables workloads. CDUs and PDUs are the IT rack’s “sidecars.” The new Flex AI infrastructure platform is a prime example, unifying power, cooling, and compute into pre-engineered, modular reference designs for next-generation data centers.
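One way to see why high-voltage DC matters at this scale is to estimate the current a 1 MW rack would draw at different distribution voltages, using I = P / V. The sketch below is a rough illustration only: the voltage values are assumptions chosen for comparison and are not taken from this article or quoted from any specification, and conversion losses are ignored.

```python
RACK_POWER_W = 1_000_000  # 1 MW IT load, as discussed in the text

# Assumed, illustrative DC distribution voltages (not from the article).
ASSUMED_VOLTAGES_V = (48, 400, 800)


def bus_current_amps(power_w: float, voltage_v: float) -> float:
    """Ideal DC current for a given power and voltage (I = P / V), ignoring losses."""
    if voltage_v <= 0:
        raise ValueError("voltage_v must be positive")
    return power_w / voltage_v


for volts in ASSUMED_VOLTAGES_V:
    amps = bus_current_amps(RACK_POWER_W, volts)
    print(f"{volts:>4} V DC -> {amps:,.0f} A")
# prints:
#   48 V DC -> 20,833 A
#  400 V DC -> 2,500 A
#  800 V DC -> 1,250 A
```

Because conductor size and resistive losses grow with current, delivering the same power at a higher voltage needs far less copper, which helps explain the move to a separate power rack.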