The insatiable demand for AI and high-performance computing (HPC) is driving the need for more data center capacity. New data center builds continue, but there are challenges. Land is a key consideration, especially given the parameters that govern preferred data center locations — access to reliable power, sufficient broadband infrastructure, water for cooling, proximity to end users, and applicable zoning and permitting. Taken together, these factors are driving innovation as data center operators rethink how vast interior spaces are configured. The real estate adage “location, location, location” is as much about what’s inside the building as where it’s sited.

Beyond compute capacity: power and cooling as determining factors

Whether standing up new data centers or retrofitting existing ones, more compute in less space (higher density) is the goal. But is that feasible given the inordinate amount of energy required to power modern data centers?

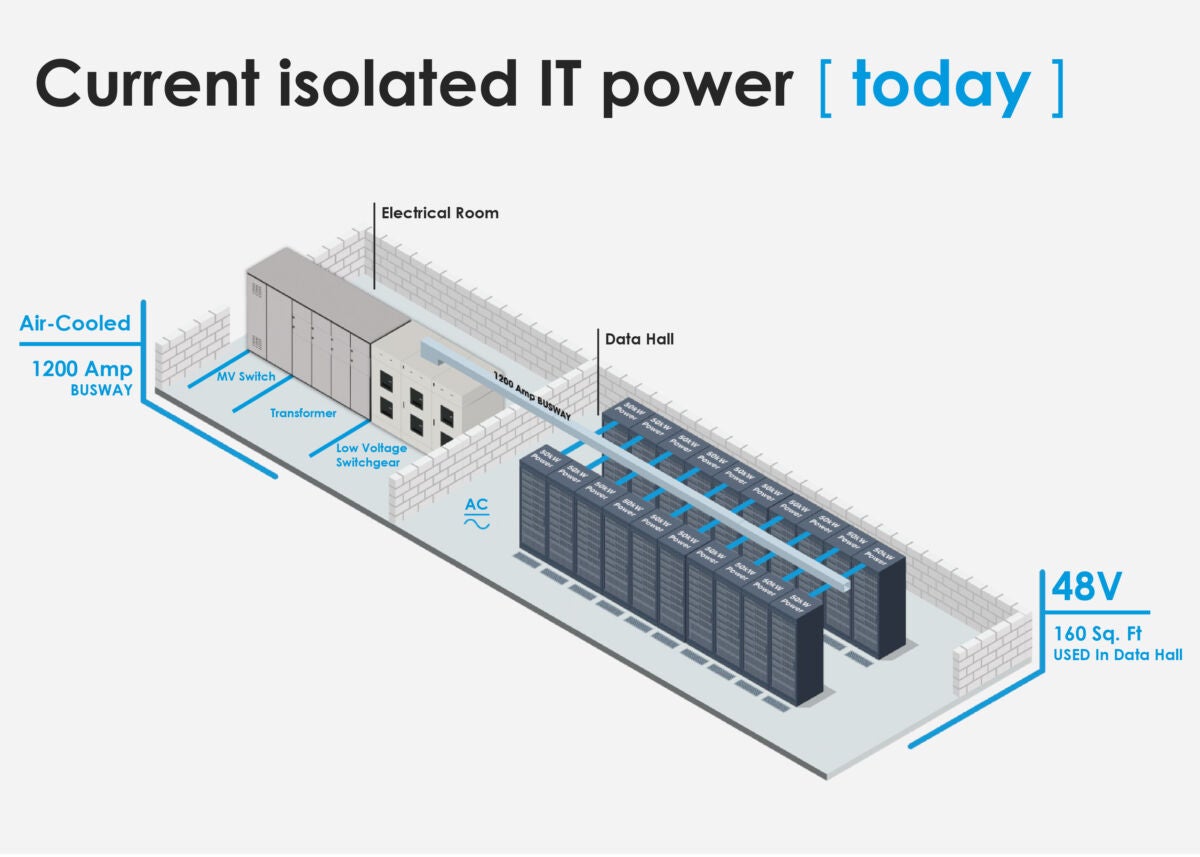

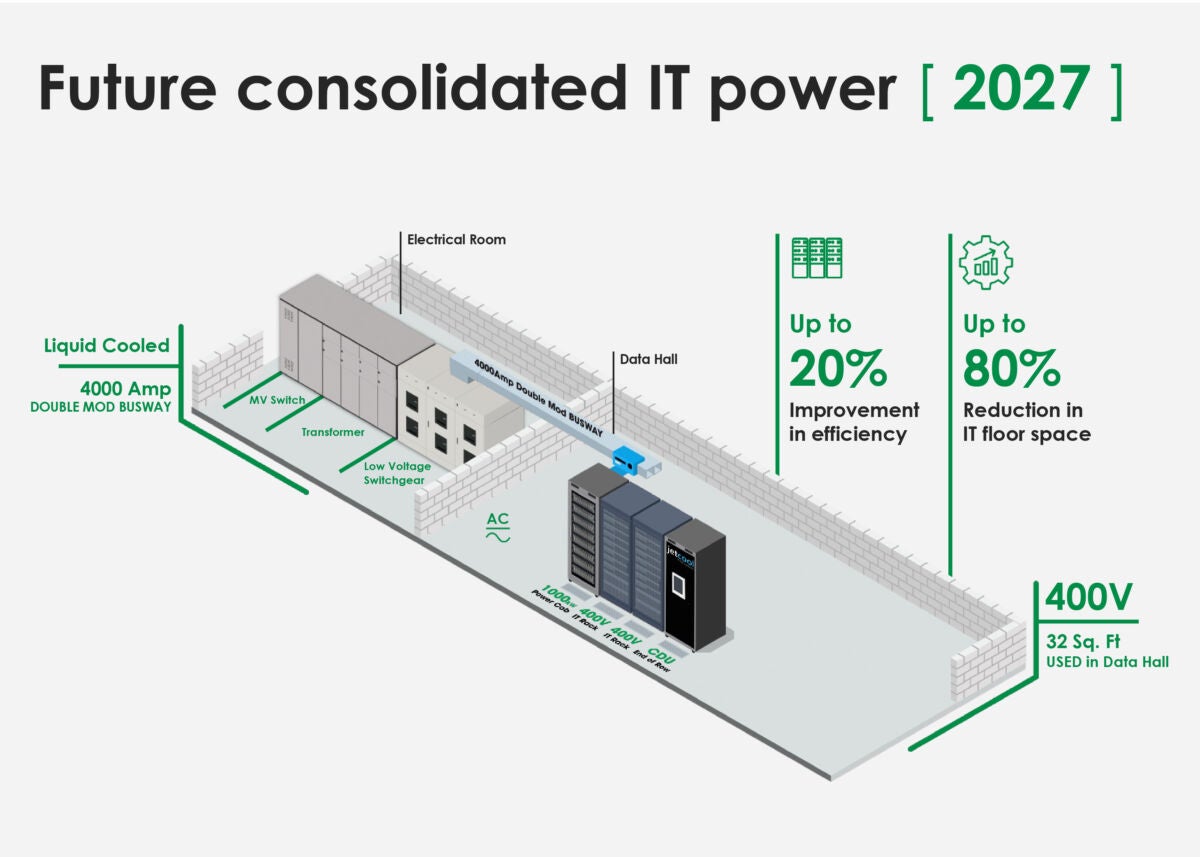

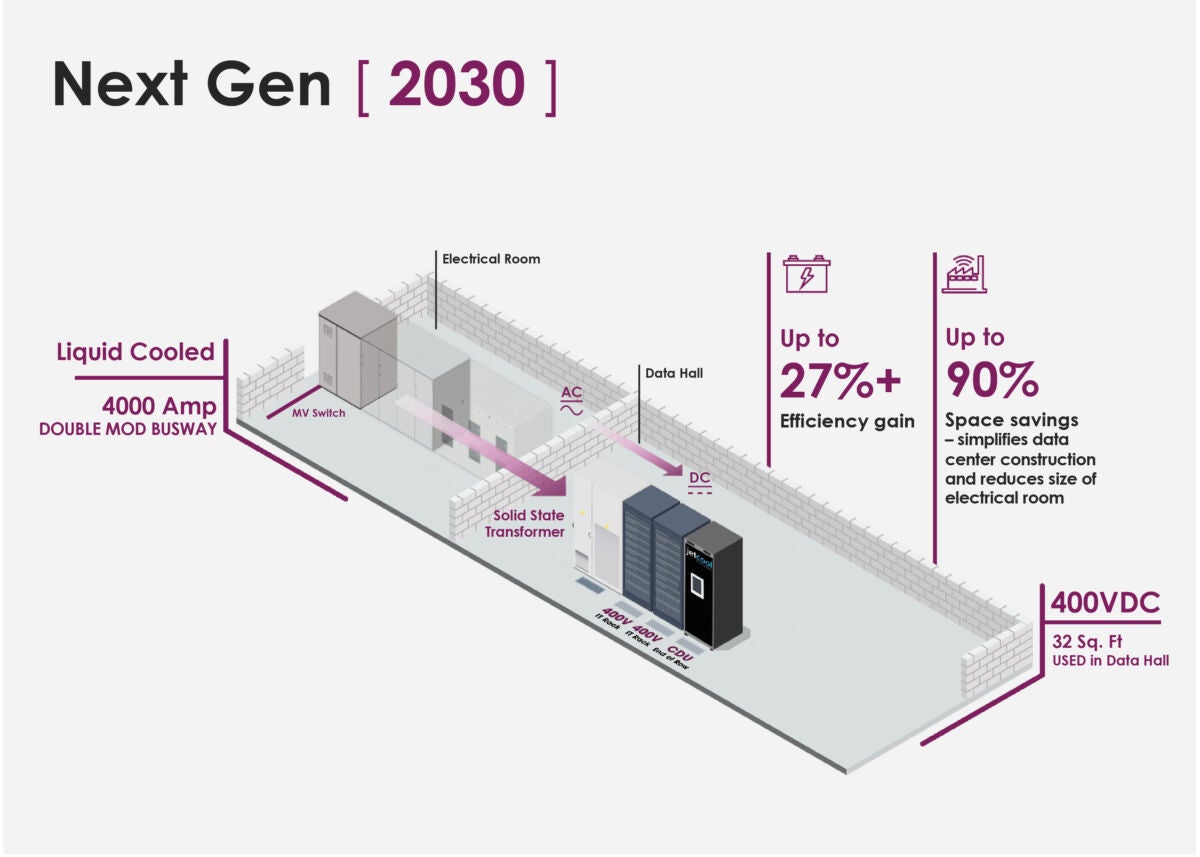

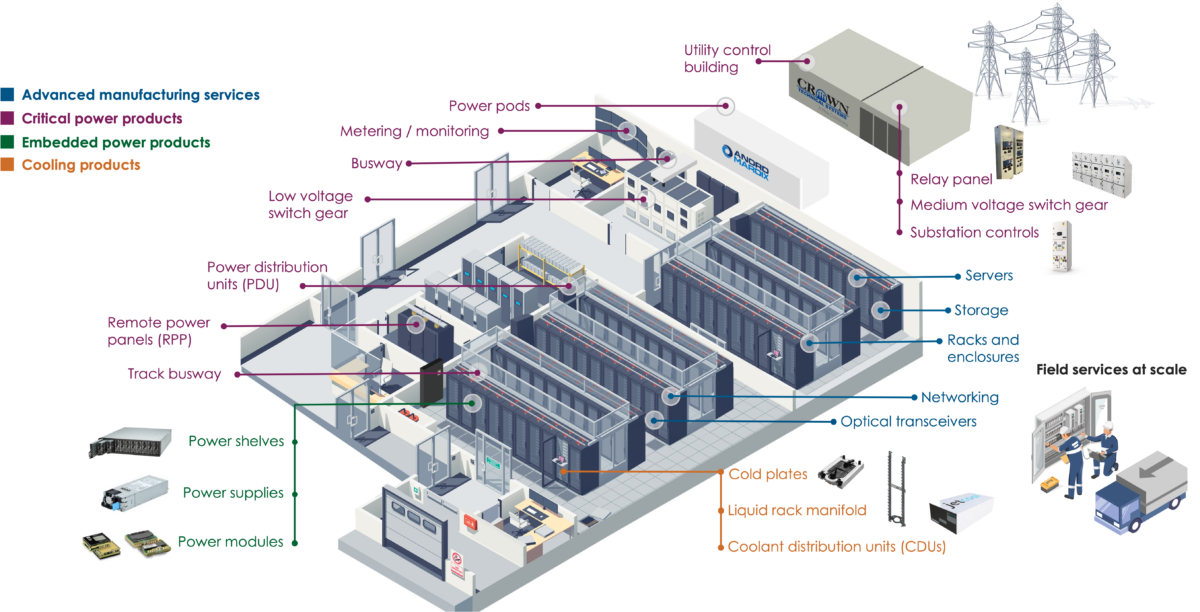

Traditionally, the ratio of grey space to white space in the data center has been approximately 1:1, according to the RICS Construction Journal. That is, for every square foot of grey space devoted to electrical and cooling equipment, such as switchgear, uninterruptible power supply (UPS) systems, transformers, computer room air conditioning (CRAC) units, and chillers, there is a square foot of white space reserved for IT equipment such as servers, storage, and networking devices. Hyperscale data centers often target a ratio closer to 1:3, giving more floor space to compute. The precise proportion depends on several operational factors, including:
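To make these ratios concrete, here is a minimal Python sketch that splits a building's floor area according to a grey:white ratio. The function name `split_floor_area` and the 100,000 sq ft building are hypothetical illustrations. The model also assumes that all floor area is either grey or white space, ignoring offices, loading docks, and other support areas.

```python
def split_floor_area(total_sq_ft: float, grey: float, white: float) -> tuple[float, float]:
    """Split total floor area into (grey, white) square feet for a grey:white ratio.

    Hypothetical helper: assumes every square foot is either grey space
    (power and cooling equipment) or white space (IT equipment).
    """
    if total_sq_ft < 0 or grey <= 0 or white <= 0:
        raise ValueError("area must be non-negative and ratio terms positive")
    parts = grey + white
    return total_sq_ft * grey / parts, total_sq_ft * white / parts


# Hypothetical 100,000 sq ft building under the two ratios cited above
for label, (g, w) in {"traditional 1:1": (1, 1), "hyperscale 1:3": (1, 3)}.items():
    grey_area, white_area = split_floor_area(100_000, g, w)
    print(f"{label}: grey {grey_area:,.0f} sq ft, white {white_area:,.0f} sq ft")
```

In this simplified model, moving from 1:1 to 1:3 shrinks grey space from 50,000 to 25,000 sq ft. White space available for compute grows from 50,000 to 75,000 sq ft.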

- Power density — Power consumption starts at the chip. Central processing units (CPUs), which handle a wide variety of computing tasks, operate at 150W–300W. Accelerators such as graphics processing units (GPUs), designed for the massive parallelism widely used in AI, can draw up to 10 times the power of CPUs, operating at up to 1,500W and rising. Data centers that consume more power per square foot typically need more floor space for electrical and cooling infrastructure.

- Cooling technology — As compute density ramps up, data centers heat up. Just a few years ago, typical server racks were designed for about 10kW of power. Now, most can handle 40kW–60kW, and hyperscalers are preparing for racks of 1MW or more. At that level, a single rack will generate roughly 20 times the heat of today's 40kW–60kW racks. Cooling infrastructure can push the grey-to-white-space ratio above 1:1. Air cooling, which is practical up to about 50kW per rack, requires more ducting and CRAC units. Liquid cooling instead requires more plumbing, pumps, and containment systems.
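The scaling claims in both bullets reduce to simple ratios. The Python sketch below reproduces them under two stated simplifications. First, a rack's heat output is assumed to roughly equal the electrical power it draws. Second, the approximately 50kW air-cooling ceiling is treated as a hard cutoff, which real facilities do not do. All constant and function names are hypothetical.

```python
# Figures taken from the text; names are illustrative only.
CPU_W = (150, 300)          # CPU power range, watts
GPU_W = 1_500               # upper GPU power cited, watts
LEGACY_RACK_KW = 10         # typical rack "a few years ago"
CURRENT_RACK_KW = (40, 60)  # typical rack today
FUTURE_RACK_KW = 1_000      # "1MW or more"
AIR_COOLING_LIMIT_KW = 50   # approximate practical ceiling for air cooling


def heat_multiple(new_kw: float, baseline_kw: float) -> float:
    """Ratio of heat loads, assuming heat output ~= electrical power drawn."""
    return new_kw / baseline_kw


def exceeds_air_cooling_limit(rack_kw: float, limit_kw: float = AIR_COOLING_LIMIT_KW) -> bool:
    """Simplified check: treats the ~50kW air-cooling ceiling as a hard cutoff."""
    return rack_kw > limit_kw


print(f"GPU vs CPU power: {GPU_W / CPU_W[1]:.0f}x to {GPU_W / CPU_W[0]:.0f}x")
current_mid = sum(CURRENT_RACK_KW) / len(CURRENT_RACK_KW)  # midpoint of 40-60kW
print(f"1MW vs {current_mid:.0f}kW rack: {heat_multiple(FUTURE_RACK_KW, current_mid):.0f}x heat")
print(f"1MW vs {LEGACY_RACK_KW}kW rack: {heat_multiple(FUTURE_RACK_KW, LEGACY_RACK_KW):.0f}x heat")
for kw in (LEGACY_RACK_KW, *CURRENT_RACK_KW, FUTURE_RACK_KW):
    print(f"{kw:>5}kW rack beyond air-cooling limit: {exceeds_air_cooling_limit(kw)}")
```

The 20x figure holds against a mid-range 50kW rack. Against a 10kW rack from a few years ago, the multiple is closer to 100x. The GPU-to-CPU comparison works out to 5x–10x, depending on which end of the CPU range is used.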