Has bespoke given way to standardization?

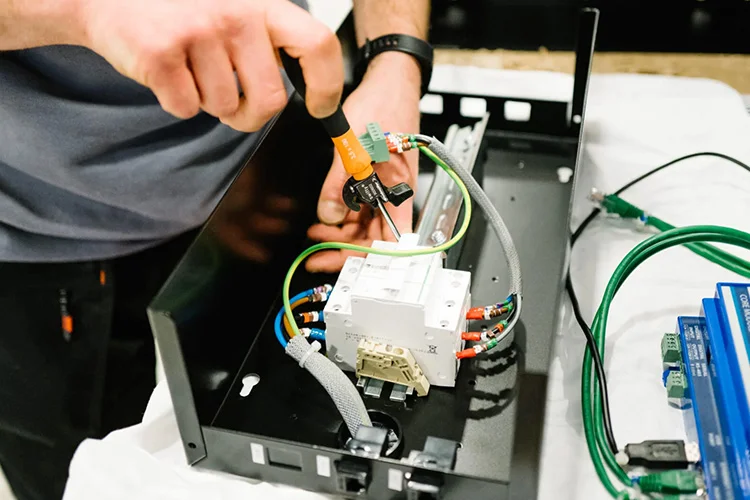

We still build to specific customer needs and regional requirements, but as we’ve seen through efforts such as the Open Compute Project (OCP), standardization, modularization, and replication are the fastest way to scale. All of our customers are talking to me about building blocks like CDUs, PDUs, and power pods: preconfigured, pre-commissioned, pre-tested, and pre-validated systems that unlock the scale they need.

What’s the unsung hero in all this?

Speed to deployment. Once the site is selected and power access is secured, the timeline for a hyperscale data center build averages about 18 months, and every data center operator would shorten that if they could. So, what happens after manufacturing is incredibly important, from right-place, right-time delivery through commissioning, inspection, and energization. The more we can do in the factory, the faster operators can move on site. What if we could get it down to 30 to 60 days? Perhaps the “sizzle” isn’t just the technology. It’s the ability to reduce the time it takes for our data center customers to become operational.

It’s one reason you see Flex continually expanding our manufacturing capacity by repurposing space, leasing facilities, acquiring complementary companies, and forging strategic partnerships. Building agile, resilient supply chains is a big part of that equation, too.

This is such a rapidly evolving industry. What else are you thinking about in terms of the future state of data center power?

Grid constraints aren’t going away anytime soon, and data center operators are exploring onsite power generation beyond backup generators to ensure performance, reliability, and scalability. How will onsite nuclear modules, solar/wind/geothermal microgrids, natural gas turbines and other energy sources affect the data center power infrastructure and its interaction with the grid? Do they eliminate or exacerbate equipment shortages?

At Flex, we’re primarily focused on the management and transmission of power rather than how it’s generated, but we have experience in utility power that goes well beyond a standard data center deployment and are excited to be able to harness that broad knowledge base in these industry discussions.

If there’s one thing missing from this capacity build-out equation, what is it?

Workforce preparation. We’ve all heard about the shortage of electricians, plumbers and other tradespeople needed to build capacity, but few are talking about the people needed to inspect and commission projects or troubleshoot power quality and efficiency issues, among other skills. And there aren’t a lot of people who understand the next-gen ±400 VDC and 800 VDC power architectures, either. Preparing for the future isn’t just about technology. It’s about talent. There are rewarding careers in the power field. We need to create a path for people. I think it’s a really exciting time in a great industry.