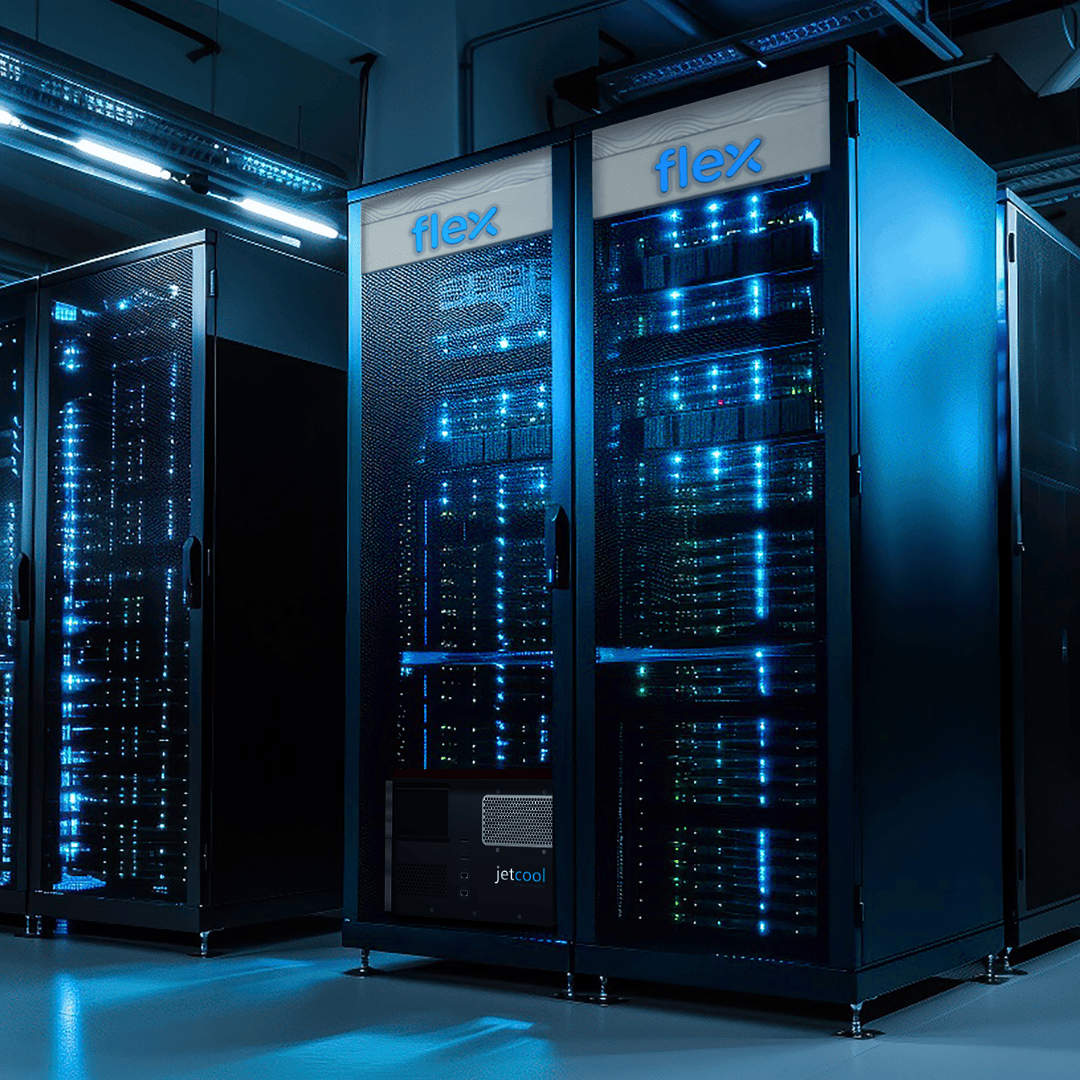

The shift to high-performance AI workloads is here, and it demands secure, scalable, and integrated infrastructure solutions, including advanced liquid cooling that can handle the enormous heat output of dense, power-hungry megawatt racks. Coolant distribution units (CDUs) are essential safeguards for servers, processors, and other heat-generating equipment in modern data centers.

In this eBook, you will learn how to evaluate the performance of these critical data center components to determine which CDU suits your application, and how the JetCool SmartSense CDU performs in terms of effectiveness, reliability, and suitability for specific cooling requirements.

- Cooling capacity

- Approach temperature difference

- Flow rate

- Pressure head

- Cooling load density

- Temperature range

High-Performance Workloads Require Liquid Cooling in Data Centers

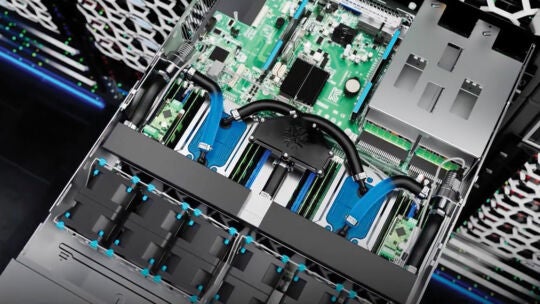

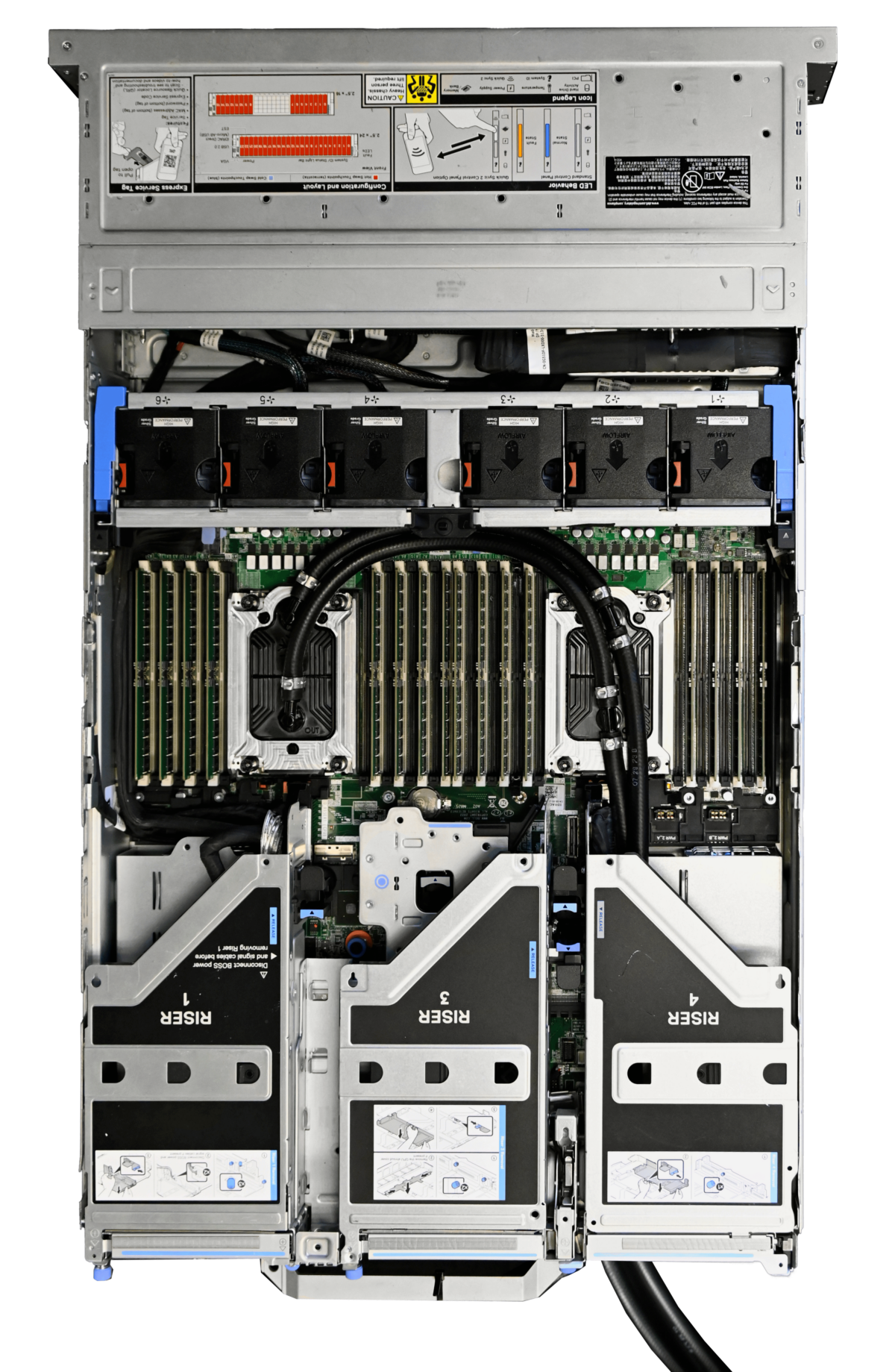

As generative artificial intelligence (GenAI), high-performance computing (HPC), and cloud workloads drive up compute demand, traditional air cooling in data centers is increasingly unable to meet the thermal and energy-efficiency requirements of accelerated computing. Modern processors and AI accelerators generate significantly more heat, especially as rack densities exceed 100 kW per rack. With the introduction of next-generation GPUs such as the NVIDIA Blackwell B200 Tensor Core GPU, which has a thermal design power of 1,200 W, liquid cooling is required to keep these GPUs within a safe operating temperature.

This is especially true for large AI server deployments, where heat and power demands push existing infrastructure to its limits. For example, the NVIDIA NVL72 rack system, which is based on the B200 GPU, requires 120 kW per rack today, with a roadmap to 600 kW in 2027. With its far higher thermal conductivity, liquid cooling can efficiently remove heat from high-density racks, which makes it the preferred choice in many AI data centers.
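To give a feel for what these rack loads mean for a CDU, the following sketch estimates the coolant flow needed to carry a given heat load, using the standard heat balance Q = ṁ · c_p · ΔT. It is an illustration only: the function name `required_flow_lpm`, the water properties (c_p ≈ 4,186 J/(kg·K), density ≈ 1 kg/L), the 10 K coolant temperature rise, and the assumption that the entire rack load goes into the liquid loop are all assumptions made here, not figures from this eBook or from any vendor specification.

```python
def required_flow_lpm(heat_load_kw, delta_t_k,
                      cp_j_per_kg_k=4186.0, density_kg_per_l=1.0):
    """Estimate coolant flow in L/min from the heat balance Q = m_dot * cp * dT.

    Defaults approximate plain water (an assumption); glycol mixtures have a
    lower specific heat and would need more flow for the same load.
    """
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    # Mass flow in kg/s needed to absorb the heat load at the given temperature rise
    mass_flow_kg_s = heat_load_kw * 1000.0 / (cp_j_per_kg_k * delta_t_k)
    # Convert kg/s to L/min using the coolant density
    return mass_flow_kg_s / density_kg_per_l * 60.0


if __name__ == "__main__":
    # Rack loads quoted in the text: 120 kW today, 600 kW on the 2027 roadmap
    for load_kw in (120, 600):
        flow = required_flow_lpm(load_kw, delta_t_k=10.0)
        print(f"{load_kw} kW at a 10 K rise: about {flow:.0f} L/min")
```

Under these assumptions, a 120 kW rack needs roughly 172 L/min and a 600 kW rack roughly 860 L/min. Halving the allowed temperature rise doubles the required flow, which is why flow rate and temperature range have to be evaluated together.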